Minimal Abstraction, and CS Amnesia

Here’s a trip down memory lane. I was reading Stephan Schmidt’s essay on Radical Simplicity, found it quite agreeable and myself nodding hard, and had a strong sense of déjà vu. Those thoughts sounded familiar. Mind you, not in the sense of plagiarism at all - I was actually really happy to see that, years and who knows what amount of distance apart, we had come to similar conclusions.

I’d like to share two posts I wrote on a long defunct blog, back in 2009. The first is about a concept I like to call (and have always called) “minimal abstraction”, which is very very similar to Radical Simplicity; the second is a somewhat necessary prerequisite and prequel to the first. I reproduce them here without changes, some comments are added [in square brackets], and I’ve provided better links to other places where possible.

I find it interesting how my thinking hasn’t changed much in the 14 or so years since I wrote these things. If anything, I’ve become more grumpy about those things, because several cycles of the criticised nature have happened since.

Minimal Abstraction

Ask yourself: don’t you often have the feeling that your brand-new 1024-core desktop SUV with 4 TB RAM and hard disk space beyond perception takes aeons to boot or to start up some application? (If the answer is no, come back after the next one or two OS updates or so.)

I don’t want to rant about any particular operating system or application — the choice is far too big. Still, honestly, one thing I am often wondering about (and I guess I’m not all alone) is why modern software is so huge and yet feels so slow even on supposedly fast hardware.

All those endless gigabytes of software (static in terms of disk space consumption and dynamic in terms of memory consumption) and all those CPU cycles must be there for a purpose, right? And what is that purpose, if not to make me a, well, productive and hence happy user of the respective software? There must be something wrong with complexity under the hood.

During that conversation [see below] about Niklaus Wirth with my friend Michael Engel in Dortmund which got me started about computer science’s tendency to regard its own history too little, and during which I had also mentioned my above suspicion, Michael pointed me to an article by Wirth, titled A Brief History of Software Engineering, which appeared in the IEEE Annals of the History of Computing in its 2008 July–September issue. This article contains a reference to another of Wirth’s articles titled A Plea for Lean Software, which was published in IEEE Computer in February 1995.

The older article phrases just the problem I pointed out above, in better words than I could possibly use, and it did so more than a decade ago. So it’s an old problem.

Here’s a quotation of two “laws” that Wirth observes to be at work: “Software expands to fill the available memory. … [It] is getting slower more rapidly than hardware becomes faster. …” According to Wirth, their effect is that while software grows ever more complex (and thus slow), this is accepted because of advances in hardware technology, which avoid the performance problems’ surfacing too much. The primary reason for software growing “fat” is, according to Wirth, that software is not so much systematically maintained but rather uncritically extended with features; i.e., new “stuff” is added all the time, regardless of whether the addition actually contributes to the original idea and purpose of the system in question. (Wirth mentions user interfaces as an example of this problem. His critique can easily be paraphrased thus: Who really needs transparent window borders?)

Another reason for the complexity problem that Wirth identifies is that “[t]o some, complexity equals power”. This one is for those software engineers, I guess, that “misinterpret complexity as sophistication” (and I might well have one or two things in stock to be ashamed about). He also mentions time pressure, and that is certainly an issue in corporate ecosystems where management does not have any idea about how software is (or should be) built, and where software developers don’t have any idea about user perspective.

I must say that I wholeheartedly agree with Wirth’s critique.

Digression.

Wirth offers a solution, and it’s called Oberon. It’s an out-of-the box system implemented in a programming language of the same name, running on the bare metal, with extremely succinct source code, yet offering the full power of an operating system with integrated development tools. One of the features of the Oberon language, and also one that Wirth repeatedly characterises as crucial, is that it is statically and strongly typed.

Being fond of dynamic programming languages, I have to object to some of the ideas that he has about object-oriented programming languages and typing.

“Abstraction can work only with languages that postulate strict, static typing of every variable and function.”

“To be worthy of the description, an object-oriented language must embody strict, static typing that cannot be breached, whereby programmers can rely on the compiler to identify inconsistencies.”

Well, no.

My understanding of abstraction (and not only in programming languages) is that it is supposed to hide away complexity by providing some kind of interface. To make this work, it is not necessary that the interface be statically known, as several languages adopting the idea of dynamic typing show. Strict and static typing in this radical sense also pretty much excludes polymorphism, which has proven to be a powerful abstraction mechanism. (Indeed, Wirth describes what is called “type extension” in Oberon, which is called “inheritance” elsewhere.) It is correct that static strict typing allows for compilers to detect (potential) errors earlier, but abstraction works well and nicely with languages that don’t require this.
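To make the point about interfaces that are not statically known concrete, here is a minimal sketch in Python (chosen purely for illustration; the class and function names are made up for this example). The function `total_area` relies only on each object responding to `area()`; no common type is declared anywhere.

```python
import math


class Circle:
    def __init__(self, radius):
        self.radius = radius

    def area(self):
        return math.pi * self.radius ** 2


class Square:
    def __init__(self, side):
        self.side = side

    def area(self):
        return self.side ** 2


def total_area(shapes):
    # The only "interface" is that every element answers area().
    # Nothing here is checked before the call actually happens.
    return sum(shape.area() for shape in shapes)


if __name__ == "__main__":
    print(total_area([Square(2), Square(3), Circle(1)]))
```

The complexity of each shape stays hidden behind `area()`, which is all the abstraction needs. What is lost, compared to a statically typed language, is only the early warning: passing an object without `area()` fails at run time rather than at compile time.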

It is puzzling to read that an OOP language must be statically and strictly typed to be rightfully called an OOP language. Ah, no, please, come on! Even as early as 1995, there were programming languages around that one would have the greatest difficulty classifying as not being OOP languages, in spite of their being dynamically typed. Moreover, it is an inherent property of living systems (which the object-oriented paradigm has always striven to capture) that objects in them assume and abandon roles during their lifetimes, something that is hard to capture statically.

Finally, it is interesting to note that the successor of the Oberon system [a system called Bluebottle; the link to the original page is defunct] features a window manager that supports semi-transparent windows. Do you see the irony in this?

End of digression.

As stated above, I really share Wirth’s opinion that there is too much complexity in software, and I believe this is still true today. What can be done about it? Regarding operating systems, we depend on diverse device drivers even more than a decade ago, so we need a certain degree of abstraction to allow operating systems to talk to different hardware. Regarding convenience and user experience, the occasional bit of eye candy makes working with systems undoubtedly more comfortable. We should still ask ourselves whether it’s really, really necessary though, and perhaps concentrate on the really important things, e.g., responsiveness.

So what to do? I don’t really have a definitive answer, but I believe that the idea of minimal abstraction is worth a look. The “minimal” in the term does not necessarily mean that systems are small. It means that the tendency to stack layers upon layers of software on top of each other is avoided.

Minimal abstraction is the principle at work in frameworks such as COLA (a tutorial is [no longer, sorry] available) or in the work on delegation-based implementations of MDSOC languages I kicked off with [the late] Hans Schippers. I also believe that the elegance and (in a manner of speaking) baffling simplicity of metacircular programming language implementations (more recently, such as Maxine) are definitely worth a look.

I am sure it is possible to avoid complexity as we have to observe it today, and to make software more simple, better understandable and maintainable, and I believe the above is a step in that direction.

Computer Science and “Geschichtsvergessenheit”

Yesterday, a friend working at a German university told me over ICQ that for most of his students the name Niklaus Wirth didn’t ring a bell. I was mildly shocked, and we ranted (ironically) a bit about today’s students’ being undereducated and ignorant and all. Eventually, we came up with a quickly and superficially assembled list of some more persons that we think one should know if they’re into computer science: Alan M. Turing, Grace Hopper, Ada Lovelace, Edsger W. Dijkstra, David L. Parnas, and Konrad Zuse. Some of these might have been chosen based on personal preference, but most of them undoubtedly have made significant contributions to computer science.

Let’s face it: the practical outcomes of academic computer science tend to reoccur in cycles. Distributed systems of yore are somehow residing in the SOA/grid/cloud triangle these days, and concepts that have long been known are re-introduced and hyped with all the marketing power of globalised corporations. While this is typical for industry, it’s unsettling that academia jumps on the bandwagon almost uncritically, generating massive amounts of publications at high speed that don’t actually tell anything new. The seminal papers that have been published, in some cases, decades ago are mostly not even referenced in these.

I believe this is not a deliberate choice of the authors of said papers. Any academic worth their share will strive to give relevant related and previous work due credit. So what is it that brings this about? Why is computer science so geschichtsvergessen (unaware, if not ignorant, of (its own) history)?

Is it because many, too many, universities focus on teaching students the currently hyped programming language?

Is it because education at academic institutions too often and too strongly concentrates on creating industry-compatible computer scientists operators?

Is it because computer science education is not designed to be sustainable?

The above questions can, more or less obviously, be answered with yes — and that is sad. Not because students don’t know the names of people that helped shape computer science in its early days; that, one could do with. It is much more problematic that ignorance (be it deliberate or not) of previously achieved important, crucial results leads to too much work being done over and over again. It’s reinventing the wheel on a large scale.

Most academic disciplines I know of introduce their students to the historical background and development of their subject early in the curriculum. Students of economic science learn about mercantilism, Smith, Keynes, and Friedman early on; and prospective jurists are soon faced with the Roman legal system and its numerous influences on contemporary legal systems. Why does a computer science curriculum start, ironically exaggerated, with a darned Java programming course?

It’s the teachers’ job to change this. Still, they often themselves don’t know their ancestors (and I am not an exception myself). Information is available. Two pointers that spring to mind are these:

Friedrich L. Bauer’s small volume “Kurze Geschichte der Informatik” (sorry, I don’t know if it’s available in English) connects computer science to its roots in mathematics and philosophy and depicts its historic development until the early 1980s (sadly, it stops there).

The volume “Software Pioneers” edited by Manfred Broy and Ernst Denert collects reprints of seminal papers by various computer science pioneers. It comes with 4 DVDs (!) containing videos of talks of most of these persons, who were gathered at a Software Pioneers Conference in Bonn (Germany) in 2001.

Please, let’s not forget where we come from, aye?

Tags: hacking

Markdown Links in MacVIM

Some text editors have that nice feature that if you want to turn a span of text into a link, you select it, and when you then paste a URL, the editor will create that link in place. My favourite text editor is MacVIM, and I thought it’d be nice to have that feature there as well. Since most of the text I edit in MacVIM is Markdown, selected text needs to be converted into a link following the syntax rules.
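The intended behaviour can be sketched in a few lines. The following is not the plugin (which is written in the vim language) but a Python illustration of the rule it should follow; the function name and the deliberately simple "starts with http:// or https://" URL check are assumptions made for this sketch.

```python
import re

# Assumption for this sketch: a "URL" is a single http(s) token.
URL_PATTERN = re.compile(r"^https?://\S+$")


def paste_over_selection(selected_text, clipboard):
    """Return the text that should replace the selection when pasting."""
    candidate = clipboard.strip()
    if selected_text and URL_PATTERN.match(candidate):
        # Markdown inline link syntax: [text](url)
        return f"[{selected_text}]({candidate})"
    # Anything else keeps the normal paste behaviour.
    return clipboard


if __name__ == "__main__":
    print(paste_over_selection("Squeak", "https://squeak.org"))
    print(paste_over_selection("Squeak", "just some text"))
```

Note the second branch: pasting something that is not a URL must behave exactly as before.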

Now, MacVIM can be extended (of course). As I didn’t know the language well enough, I thought I’d vibe code it with some help from Claude Sonnet, which I use in my Langdock workspace. (To be clear, I did not use Claude Code, but plain old Claude with a canvas.)

Because I wanted to be able to understand (and assess the quality of) what would come out of this, I started by reading a nice and compact primer on the vim language. That didn’t take long, and after a brief pause to appreciate the quirkiness of the language, I got started.

Phase 1: Building the Thing

The first result looked right. However, it didn’t work at all - hitting Cmd-V on selected text with a URL in the clipboard just pasted the URL instead of the selected text, which was the normal behaviour I had intended to replace.

A few exchanges later, during which Claude gave rather helpful advice for troubleshooting and debugging, and even added code to produce debugging output, I realised that I had made a mistake in my first prompt: I had mentioned I wanted a vim plugin, but had not mentioned I was using MacVIM. That got me much closer to the goal.

Phase 2: Shrinkwrapping the Thing

I ended up with a version of the plugin that did what I wanted; however, it still produced debugging output. So I instructed Claude to take away all the fluff and be minimalistic about the original requirement.

The result fell back to just replacing selected text with the pasted URL. In shrinkwrapping the plugin, Claude had also removed MacVIM specifics that were required to make it work. One instruction later, that was fixed.

However, now it didn’t get the spaces around the freshly created link right. That took one more round, after which pasting of non-URLs was broken. One instruction later, the plugin suddenly contained a syntax error. Once that was addressed, the thing worked as expected (for good).

Phase 3: Understanding the Thing

I don’t think vibe coding should be a discipline where humans delegate everything lazily to an LLM. So I started a new Claude session, fed it the code, and instructed the model to explain it to me. With my entry-level understanding of the vim language, I should be able to appreciate what was going on. That worked well.

Finally, I asked the second Claude session what could be improved about the code. Because LLMs are “eager to please”, I instructed it to avoid digging for “improvements” just for the sake of it, but to apply a fair assessment.

The result was good; there was one actual duplication that made no sense, which hadn’t met my eye and which I subsequently removed. I chose to not adopt another suggestion about removing code duplication for register saving-and-restoring and replacing it with an extra abstraction.

Show me the Code!

All right, all right, here it is. Needless to say, the Markdown source of this post contains several links, all of which were created using the help of this plugin.

Tags: hacking

ssh Tips

Most of us technical people are using ssh in one way or another. I came across a posting that laid out some of the features of ssh for me in a very clear way, and gave me some insights into features I didn’t have in my active working memory. Here it is.

Tags: hacking

Smalltalk, Anyone?

The Smalltalk programming language is a wonderful tool for “pure” object-oriented programming. It goes back to the 1970s; the first official release was in 1980. Smalltalk came with a full-fledged graphical user interface, and an integrated development environment. Its debugger allowed for changing code of the running system on the spot, without having to restart everything, even in the middle of running a test suite. (If that makes you shrug, remember this was in 1980.)

There are multiple implementations of the language and environment still. The one I’d recommend - and that helped me earn my doctorate and subsequent postdoc position - is Squeak. It was written by a team around the original inventors of Smalltalk.

In case you’re interested in getting a different idea of what object-oriented programming in a live environment can be, check out Squeak, and the free book Squeak by Example. The latest release PDF is here.

Tags: hacking

Self-Hosted News Aggregation

RSS is highly useful to keep track of news sites and blogs. I’ve been using a TinyTinyRSS (tt-rss) instance hosted in my personal web space for many years. When I started using it, tt-rss software supported the Fever API, which my preferred reader (Reeder) connects to. All of tt-rss is written in PHP.

When my web space hoster decided to put the (somewhat oldish) PHP version my tt-rss instance was running on under a paid maintenance plan, it was time to update tt-rss to the latest version. However, the manual installation was no longer supported. Docker was the new preferred way now. Since my hoster package didn’t include Docker support, I decided to move the tt-rss instance to my Oracle Cloud tenancy (the always-free tier is really quite usable), where I wanted to use a Linux VM to run things.

Once I had gotten it all up and running, I discovered that the Fever API support had been discontinued. Also, none of the (many) other APIs that Reeder can connect to were supported by tt-rss. At this point, I have to mention that the tt-rss maintainer doesn’t really care much about users: “we have an API of our own, just use that” is a standard response to questions about this matter. While I have great sympathy for OSS projects (and support some financially), I also have little tolerance for that kind of attitude.

Consequently, another RSS aggregator was needed. The relevant ones are all written in PHP, for some reason, which isn’t really my preferred language, but if it’s tucked away in a neatly packaged Docker configuration, why not give it a try?

I ended up installing FreshRSS, and it is really quite convincing. It imported my feeds right away, happily keeps them updated, and Reeder can connect to it easily. Things are good, and I don’t have to pay my web hoster to keep an old PHP version alive for me.

The setup I chose involves the FreshRSS Docker image, and a PostgreSQL 14 one. That turns out to be a bit of an overkill for the tiny VM I’ve got running in OCI: it only has 1 GB of memory, and the sync with Reeder is a bit on the slow side because of constant swapping. Happily, FreshRSS also comes with an option to run using SQLite, which is as lean-and-mean as it gets. I’ll set up the thing again with that as the database backend.

Tags: hacking, the-nerdy-bit

Forth in Hardware

My interest in the Forth programming language is no secret. Imagine my amazement when a friend pointed me to this little piece of goodness. It goes without saying that I’m going to need a soldering iron soon: the My4th machine is so basic that you can’t purchase it assembled, but have to get the parts and assemble it yourself. Fun!

Tags: the-nerdy-bit, hacking

"Ur-"

The author of this blog post has assembled a list of seven programming languages he dubbed “_ur-_languages”. To qualify for inclusion, a language should embody a coherent group of paradigmatic concepts in a pure form. That is, inclusion in the list is not based on historical merit (having been the first or earliest) but rather on purity of concept. It’s a bit odd to use the prefix “ur-”, which has a strong historical connotation. The list on its own works well: the examples it gives do indeed embody the respective paradigms in a very pure form.

To illustrate, I had initially stumbled over Smalltalk or Simula not being mentioned as the _ur-_languages for object-oriented programming. The list mentions Self instead. Given the purity perspective, this makes sense: there is nothing that could be taken away from Self, it’s minimalistic yet rich. It allows for all the flavours of OOP to be implemented with elegance and ease.

And still, “ur-” ... the author’s intent would perhaps be better reflected by picking a word that expresses peak purity, rather than one that suggests historical earliness.

Tags: the-nerdy-bit, hacking

Mini Profiler

Profilers are power tools for understanding system performance. That doesn’t mean they have to be inherently complicated or hard to build. Here’s a great little specimen, in just a couple hundred (plus x) lines of Java code, including flame graph output.

Tags: hacking, the-nerdy-bit

Old Standard Textbook, Renewed

Back in university, one of my favourite courses was the one on microprocessor architecture. One of the reasons was that the lab sessions involved building logic circuits from TTL chips with lots of hand-assembled wiring (imagine debugging such a contraption). Another reason was the textbook, which explained a RISC processor architecture (MIPS) in great detail and with awesome didactics. This book was “The Patterson/Hennessy” (officially, it goes by the slightly longer and also a bit unwieldy title “Computer Organization & Design: The Hardware/Software Interface”).

This was the Nineties. Fast-forward 30 years to today, and we have a new and increasingly practical RISC architecture around: RISC-V. The good thing about this architecture is that it is an inherently open standard. No company owns the specification, anyone can (provided they have the means) produce RISC-V chips.

Guess what, that favourite book of mine got an upgrade too: there is a RISC-V edition! This means that students, when first venturing into this rather interesting territory, can learn the principles of CPU design on an open standard basis that is much less convoluted than certain architectures, and - unlike certain others - entirely royalty-free.

Makes me want to go to university again ...

Tags: the-nerdy-bit, hacking

PlanckForth

I had shared some discoveries I had made in Forth territory. Since these systems of minimal abstraction really fascinate me, I took a deep dive into the PlanckForth implementation and dissected the 1 kB ELF binary down to the last byte.

This is indeed a tiny Forth interpreter that does just the most basic things in machine code. It provides the foundation for a very consequential bootstrap to be executed on top of it. The bootstrap builds the actual Forth language from first principles in several waves.

In case you’re interested, here are the binary source and bootstrap with my comments. Note that I stopped commenting in the bootstrap once it gets to the point where the minimal Forth supports line comments.

What next? Part of me wants to build a handwritten RISC-V binary for the basic interpreter ...

Tags: the-nerdy-bit, hacking

More Forth

The Forth programming language is a thing I’ve previously written about. I recently came across this little gem of a talk, wherein the author points to some interesting resources about Forth. In particular, the slides put emphasis on how minimal a Forth implementation can be.

The point about Forth is that it heavily depends on threaded code. That’s a simple technique to implement an interpreter on the bare metal. In a nutshell, code to be executed by a threaded interpreter is mostly addresses of implementations of logic, that the very simple interpreter “loop” just loads and jumps to, following a “thread” of addresses through its implementation.

Indeed, Forth source code consists of just “words” that are concatenated to express semantics. These human-readable words are mere placeholders for the memory addresses where the words' implementations can be found. In other words, a Forth compiler is simplistic: it boils down to dictionary lookups.
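The two ideas above, a "compiler" that is little more than dictionary lookups and an interpreter loop that follows a thread of addresses, can be sketched as follows. This is a toy in Python, not how PlanckForth or any real Forth is built: Python function references stand in for machine addresses, the three words in the dictionary are picked arbitrarily, and the handling of number literals is a simplification invented for this sketch.

```python
def make_machine():
    """Create a data stack and a dictionary of primitive words."""
    stack = []

    def add():
        b, a = stack.pop(), stack.pop()
        stack.append(a + b)

    def mul():
        b, a = stack.pop(), stack.pop()
        stack.append(a * b)

    def dup():
        stack.append(stack[-1])

    # The dictionary maps human-readable words to "addresses".
    dictionary = {"+": add, "*": mul, "dup": dup}
    return stack, dictionary


def compile_words(source, dictionary, stack):
    """Turn source text into a thread: a plain list of 'addresses'."""
    thread = []
    for word in source.split():
        if word in dictionary:
            thread.append(dictionary[word])  # the lookup is the compilation
        else:
            value = int(word)  # simplification: anything else is a number
            thread.append(lambda value=value: stack.append(value))
    return thread


def run(thread):
    """The interpreter 'loop': load the next address and jump to it."""
    ip = 0
    while ip < len(thread):
        thread[ip]()
        ip += 1


def evaluate(source):
    stack, dictionary = make_machine()
    run(compile_words(source, dictionary, stack))
    return stack


if __name__ == "__main__":
    print(evaluate("3 dup * 4 +"))
```

The whole "language implementation" is `compile_words` plus `run`; everything else is the vocabulary. A real system would also let the program add new words to the dictionary, which is what makes bootstrapping a full Forth from a tiny core possible.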

To demonstrate the minimalism, the PlanckForth interpreter is just a 1 kB hand-written ELF binary. The syntax of the basic language this interpreter understands is a bit awkward, but sufficiently powerful to bootstrap a full-fledged Forth in just a couple hundred lines.

JonesForth is a richly documented Forth implementation. (You’ll have to go through the snapshot link to get the source code.) Reading the code is highly instructive.

Tags: the-nerdy-bit, hacking

Historical Source Code Every Developer Should See

This piece lists some historical source code that today’s engineers, in the opinion of the author, should read and know. The artifacts in question are the WWW demo source code, an early C compiler, pieces of the early Unix research code bases, the first Linux release, and Mocha, the first JavaScript engine.

While all of these (maybe, and forgive the snark, with the exception of Mocha) are relevant, I cannot help but wonder about the author’s rationale for choosing these particular specimens. The author argues these “built the foundation of today’s computer technology”. I beg to differ.

Graphical user interfaces are quite a thing in today’s computer technology, one should think. These owe a lot to BitBlt, the Xerox Alto machine, and, consequently, Smalltalk (that programming language had an IDE with a GUI back in the 1970s). So, for source code, I’d add to the list this memo from 1975 describing BitBlt, and the Computer History Museum’s page on the Xerox Alto source code.

Tags: hacking, the-nerdy-bit

Interpreted or Compiled?

What would you say: is Java an interpreted or a compiled language? What about C, C++, Python, Smalltalk, JavaScript, APL?

Laurie Tratt has written two (1, 2) absolutely wonderful (read: scientifically and technically nerdy) blog posts about the matter. He uses the Brainfuck programming language (it is less NSFW than the name suggests, but I will continue to use its name in abbreviated form, i.e., “bf”) and a series of implementations thereof that increasingly blur the boundary between interpreted and compiled.

The blog posts resonate with me especially because I use bf myself whenever I want to learn a new programming language: I just write an interpreter (or compiler?) for bf in that language.
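For readers who have never seen one, a bf interpreter really is small. The following Python sketch is one possible shape, under a few assumptions that bf implementations commonly differ on: a fixed tape of 30,000 cells, cell values wrapping at 256, and end of input read as 0.

```python
def run_bf(program, input_text=""):
    """Run a bf program and return its output as a string."""
    # Match brackets once, up front, so loops don't rescan the program.
    jumps, open_positions = {}, []
    for position, char in enumerate(program):
        if char == "[":
            open_positions.append(position)
        elif char == "]":
            start = open_positions.pop()
            jumps[start], jumps[position] = position, start

    tape = [0] * 30000
    pointer = 0
    pc = 0
    inputs = iter(input_text)
    output = []
    while pc < len(program):
        char = program[pc]
        if char == ">":
            pointer += 1
        elif char == "<":
            pointer -= 1
        elif char == "+":
            tape[pointer] = (tape[pointer] + 1) % 256
        elif char == "-":
            tape[pointer] = (tape[pointer] - 1) % 256
        elif char == ".":
            output.append(chr(tape[pointer]))
        elif char == ",":
            tape[pointer] = ord(next(inputs, "\0")) % 256
        elif char == "[" and tape[pointer] == 0:
            pc = jumps[pc]  # skip the loop body
        elif char == "]" and tape[pointer] != 0:
            pc = jumps[pc]  # go back to the loop start
        pc += 1
    return "".join(output)


if __name__ == "__main__":
    print(run_bf("++++++++[>++++++++<-]>+."))
```

Even this version is not a "pure" interpreter: matching the brackets before running is a small preprocessing step, which is a first nudge in the direction of compiling.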

These are two rather entertaining reads, and they will leave the reader in a better shape for the next inevitable discussion of whether this or that language is interpreted or compiled.

Tags: hacking, the-nerdy-bit

Array Programming on the Command Line

Having spent most of my adult computing life in Unix-derived operating systems, I’m quite fond of having a good command line interface (vulgo shell) available at all times. The Unix command line tools are small but powerful commands that are very well tailored to do one kind of thing. It is a definitive upside that they all work on one common shared representation of data that is passed (or “piped”) between them: text.

Text typically comes in lines, and processing line-based content with tools like grep, less, head, sort, uniq, and so forth is great. Editing data streams on the fly is possible using tools like sed, with just a bit of regular expression knowledge.

When data becomes slightly more two-dimensional in nature (lines are broken down into fields, e.g., in CSV files), awk quickly steps in. Its programming model is a bit awkward (dad-joke level pun intended), but it provides great support for handling those columns.

Two-dimensional data like that can sometimes come in the form of matrices that may have to be pushed around and transformed a bit. Omit a column here, transpose the entire matrix there, flip the two columns yonder, oh, and sum the values in this column please. While this is possible using awk, its programming model is a bit low level for those jobs.

Enter rs and datamash.

The rs tool, according to its manual page, exists to “reshape a data array”. As mentioned, those Unix command line tools do one thing, and do it very well - so here it is. It’s a wonderful little subset of APL for two-dimensional arrays.

Sometimes reshaping isn’t enough, and computations are needed. Like rs, datamash is a nice little subset of APL, only this time not focused on reshaping, but on computing. To be fair, the capabilities of datamash also cover those of rs, but while the latter is often part of a standard installation, the former requires an installation step. (This may change in the future.) With datamash, numerous kinds of column- and line-oriented operations are possible.
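To make the kinds of operations mentioned above concrete (omit a column, transpose, swap two columns, sum a column), here is what they amount to on a small matrix, sketched in plain Python. This only illustrates the operations themselves; it says nothing about how rs or datamash implement them, and the function names are made up for this example.

```python
def transpose(rows):
    """Turn rows into columns and vice versa."""
    return [list(column) for column in zip(*rows)]


def drop_column(rows, index):
    """Omit the column at the given zero-based index."""
    return [row[:index] + row[index + 1:] for row in rows]


def swap_columns(rows, first, second):
    """Exchange two columns, leaving the input untouched."""
    result = []
    for row in rows:
        row = list(row)
        row[first], row[second] = row[second], row[first]
        result.append(row)
    return result


def sum_column(rows, index):
    """Add up all values in one column."""
    return sum(row[index] for row in rows)


if __name__ == "__main__":
    matrix = [[1, 2, 3],
              [4, 5, 6]]
    print(transpose(matrix))
    print(drop_column(matrix, 1))
    print(swap_columns(matrix, 0, 2))
    print(sum_column(matrix, 2))
```

Each of these is a one-liner's worth of logic, which is exactly why it is pleasant to have them as ready-made command line tools instead of writing them out in awk every time.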

These tools are two fewer reasons to fire up R or Excel and import that CSV file ...

Tags: hacking

In Touch With Old Languages

This list of the ten most(ly dead) influential programming languages is a fun trip to olden times. Even though I’m not old enough to have used COBOL “back in the day”, no less than four of these languages play quite important roles in my life.

My first programming language was indeed BASIC, and the one I learned most with during my early programmer days was PASCAL. While I don’t have any emotional attachment whatsoever to the former, I fondly recall learning about structured programming in the latter. The BASIC dialects I had used solely relied on line numbers, and consequently calling subroutines had to happen using GOSUB plus line number. Parameter passing and returning results was awkward. PASCAL with its named procedures and functions made such a tremendous difference - it was a true relief. (Remember: this was the late Eighties / early Nineties of the past century.)

Java happened in 1995, and I was an early adopter at university. I came across true object orientation only in 2003 or so, when I first looked into Smalltalk as part of my doctoral research. That was really eye opening. If I had to pick one programming language to spend the remainder of my programming work in, it would be Smalltalk. There simply is no other language that melds programming environment, application, and runtime environment like that.

Finally, APL became important in around 2014, when I was looking into optimised implementations of array programming languages as part of my work on an implementation of the R programming language. The loop-free way of programming with large vectors and (sometimes multi-dimensional) matrices was another eye opener. Also, the quirky syntax and terseness made APL appealing to this programming languages nerd.

The list features six more. I’ve heard / read about them all for sure. If I had to pick one to look into next, it would be COBOL, simply because it’s still around and running a lot of production systems around the world.

Tags: the-nerdy-bit, hacking

Squeaking Cellular Automata

My favourite programming language is Smalltalk, and I used to do a lot of work in the implementation of the language named Squeak, back in my post-doc days. Over the years, I’ve gone back to Squeak on and off, to do some little fun projects. Some time ago, I dabbled a bit with its low-level graphic operations to implement some machinery for playing with cellular automata (more precisely, elementary cellular automata).

Building this was a nice and refreshing experience applying TDD, die-hard object-orientation, some extension of the standard library (heck yeah!), and live debugging. Especially the latter is not easily possible in languages other than Smalltalk, where the IDE and runtime environment are essentially the same.

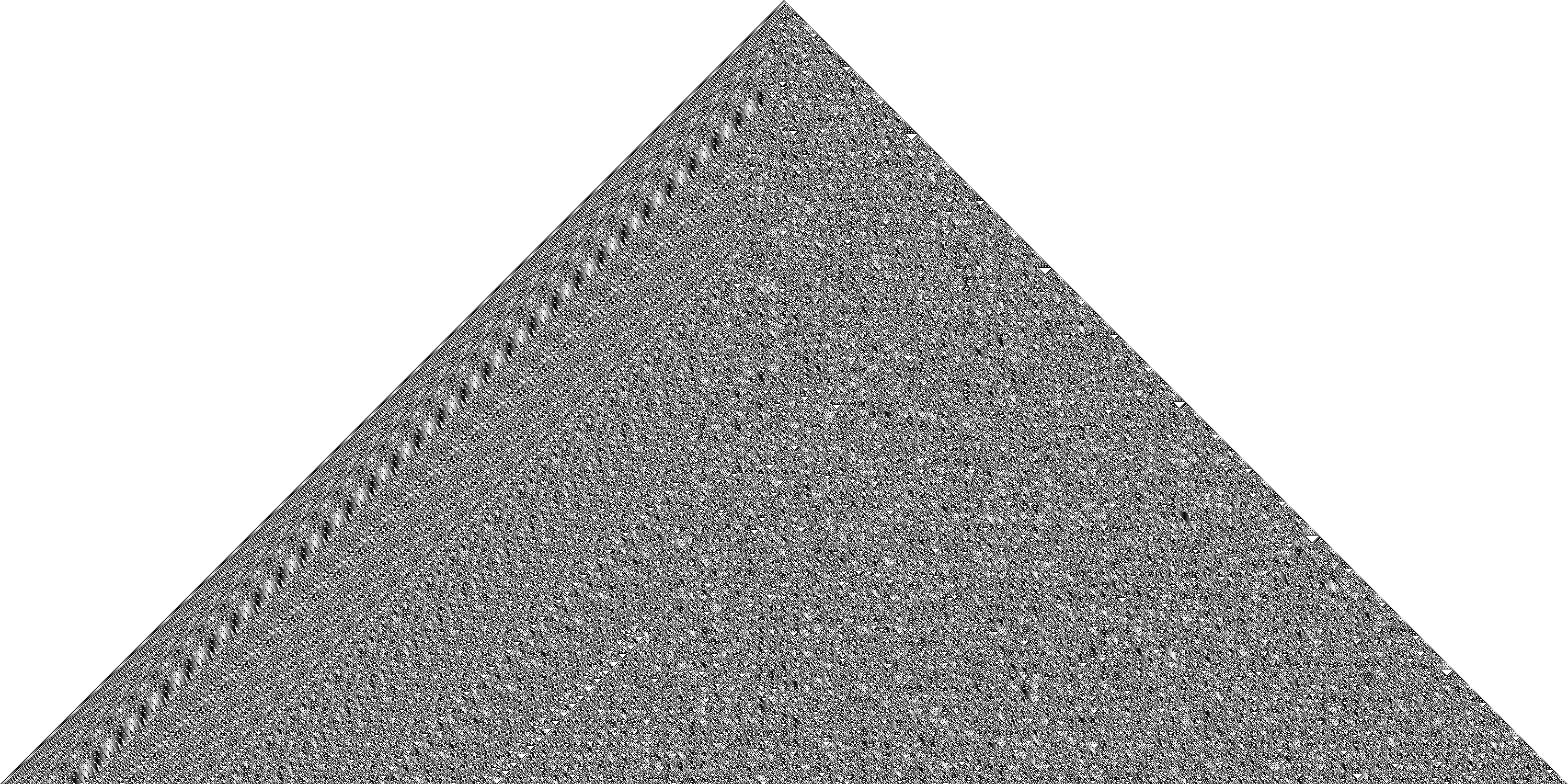

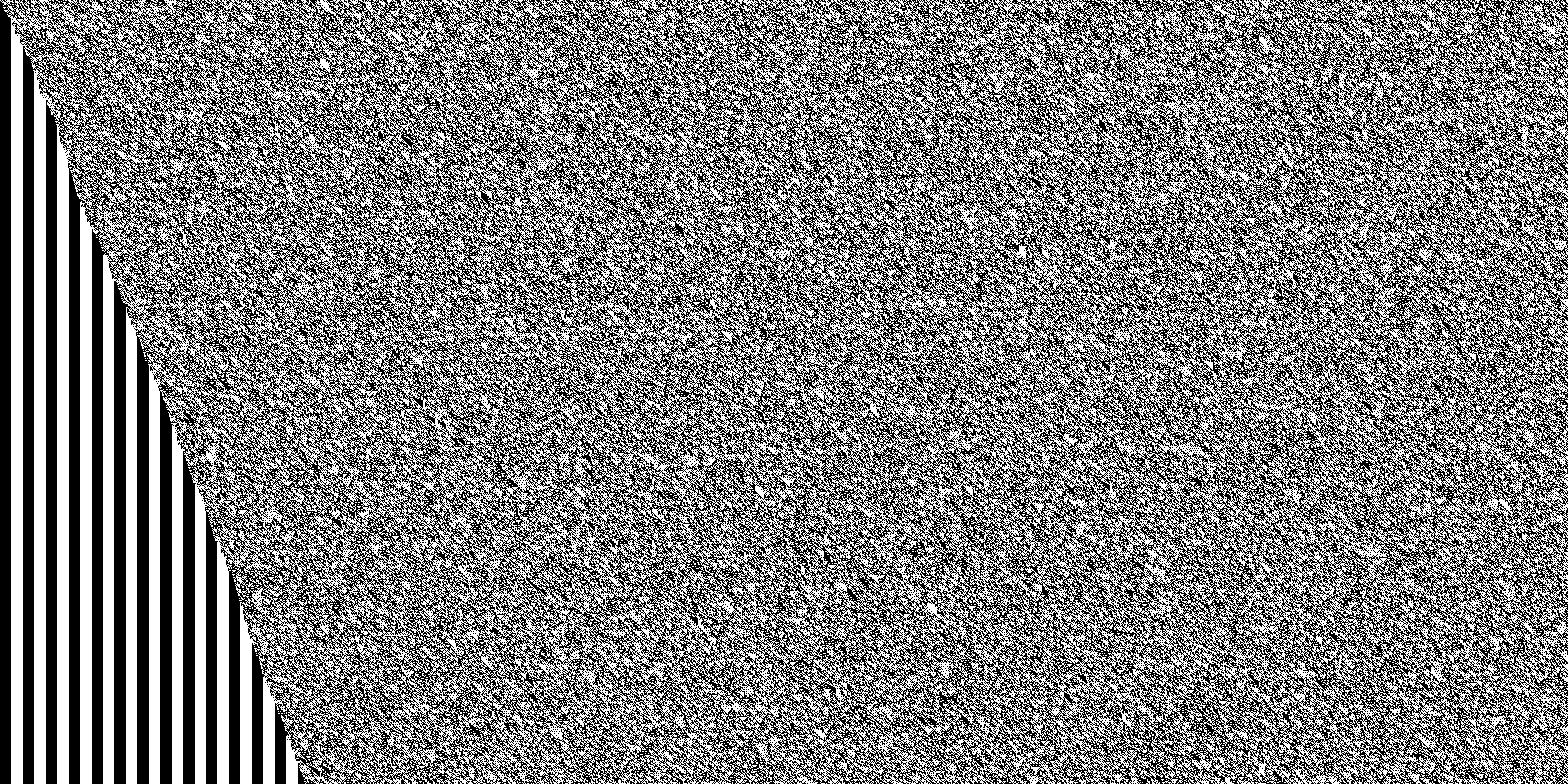

Eventually, I was able to generate some images for the rule 30 cellular automaton, which exhibits a nice dichotomy of order and chaos. Here are two examples, one for the standard initial generation with just one pixel set, and one for a random initialisation. It’s interesting to see how order seems to prevail on the left-hand side, while the right-hand side looks more chaotic (there are patterns there though, if you look closely).
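For reference, the rule itself fits in a few lines. The sketch below is in Python rather than Smalltalk and is not the Squeak implementation; it renders text instead of pixels, and it assumes that cells beyond the left and right edges are always 0. The rule number encodes the next state for each of the eight possible (left, centre, right) neighbourhoods as one bit.

```python
def step(cells, rule=30):
    """Compute the next generation of an elementary cellular automaton."""
    size = len(cells)
    next_cells = []
    for index in range(size):
        left = cells[index - 1] if index > 0 else 0
        centre = cells[index]
        right = cells[index + 1] if index < size - 1 else 0
        # Read the neighbourhood as a 3-bit number, then pick that bit
        # out of the rule number.
        neighbourhood = (left << 2) | (centre << 1) | right
        next_cells.append((rule >> neighbourhood) & 1)
    return next_cells


def generations(width, count, rule=30):
    """Start from a single set cell in the middle and iterate."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(count - 1):
        cells = step(cells, rule)
        rows.append(cells)
    return rows


def render(rows):
    return "\n".join(
        "".join("#" if cell else "." for cell in row) for row in rows
    )


if __name__ == "__main__":
    print(render(generations(width=63, count=32)))
```

Running it with a wider grid and more generations shows the same asymmetry in text form: regular diagonal stripes towards the left, irregular triangles towards the right.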

The source code is here, in case you’re interested.
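For readers who just want the gist of rule 30 without opening Squeak, here is a minimal sketch in Python. It is not the Smalltalk code linked above; the function names are made up, and treating cells beyond the edges as unset is an assumption of this sketch.

```python
def rule30_step(cells):
    """Compute the next generation of rule 30 for a list of 0/1 cells.

    Cells beyond the left and right edges are treated as 0
    (an assumption of this sketch).
    """
    padded = [0] + cells + [0]
    # Rule 30: new cell = left XOR (centre OR right)
    return [
        padded[i - 1] ^ (padded[i] | padded[i + 1])
        for i in range(1, len(padded) - 1)
    ]


def run_rule30(width, generations):
    """Start with a single set cell in the middle and collect all rows."""
    row = [0] * width
    row[width // 2] = 1
    rows = [row]
    for _ in range(generations - 1):
        row = rule30_step(row)
        rows.append(row)
    return rows


if __name__ == "__main__":
    # Print the generations top to bottom, one text line per generation.
    for row in run_rule30(width=31, generations=15):
        print("".join("#" if cell else "." for cell in row))
```

Swapping the single set cell for a random first row gives the second kind of picture.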

Tags: hacking, the-nerdy-bit

Time Complexity

Time is a really complex topic. More complex than you think. No, really: even if you’ve been looking into time zones and calendar management, there’s probably a subtlety or two you haven’t come across yet. I found this piece a fascinating (and, at times, chilling) treasure trove.

Tags: hacking, the-nerdy-bit

A Radio Show on the History of Programming Languages

(Warning: the links in this post all go to German content, and I don't know if all of them work for readers outside Germany.)

My favourite radio station, Deutschlandfunk, has a thing they call “long night” ("lange Nacht") where they devote almost 3 hours to one topic. These shows air on weekends, and obviously so late that it’s neither easy to notice them happening, nor to listen through without falling asleep. Thankfully, DLF has an audio archive where most content is kept available for some months after broadcasting. Note that this is all free content if you live in Germany (because you’ve already paid).

Here’s one on the history of programming languages. It appears to be a re-cast (maybe modified?) of content from 2019, the complete script for which you can read here.

Tags: hacking, the-nerdy-bit

BASICODE

You’ve probably never heard of BASICODE, even if you know one or two programming languages, and even if you know the BASIC programming language. I recently heard about it for the first time, and was surprised and pleased to hear that this existed.

BASICODE started when several nerds in the Netherlands wanted to exchange computer programs written in the BASIC programming language among themselves. Initially, it was a way to encode computer programs as sound, to store them on cassette tape. (This was very common before floppy disks, then CD-ROMs, then USB sticks became popular. If you know more than one of these, thank you.)

So far, so good. However, these folks soon found out that being able to transfer code between machines wasn’t everything there was to it. The BASIC dialects on the different machines had different ideas of how to do graphics, sound, I/O, and so forth.

They decided to overcome this by defining a set of subroutines (callable with the common GOSUB statement) for these kinds of things, as well as a set of conventions for how code should be organised to be able to work with these subroutines. The routines would be implemented in the respective machines' native BASIC. These machine-specific implementations of the common standard were called BASCODER. Since BASIC used line numbers back then, one of the conventions said that the BASCODERs would reside in lines below 1000, and applications could start at that line only.

In other words, the BASICODE inventors defined an ABI of sorts, and built a set of virtual machines to support a platform-independent BASIC dialect. The interface included graphics, sound, and I/O. Sound familiar? I find it fascinating that this originated in the 8-bit home computing world, independently of contemporary developments like P-Code and Smalltalk.

Since I have a certain love for 8-bit computing (having learned programming on a Schneider PCW), I was of course interested in exploring BASICODE and the applications that people have built with it. For that, I needed to use the original hardware, install some kind of emulator, or run it in a browser. None of those options was particularly satisfying. Being a programming languages nerd, what could have been more likely than me setting out to build a BASICODE myself? So I did.

It's here, built in Java, and it's under the MIT licence.

The BASIC programming language interpreter is built using a grammar written in JavaCC. The interpreter is an AST interpreter. These are very easy to implement and understand, and don’t take much time to build. My objective with the whole thing is simplicity, not design perfection. (How about a sorry excuse for ugliness?)
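To give an idea of why AST interpreters are so quick to build, here is a minimal sketch of the technique in Python. It is not taken from the Java implementation: the node classes, `evaluate`, and `execute` are made-up names, the tree is built by hand instead of by a JavaCC parser, and only a tiny LET/PRINT subset is covered.

```python
import operator
from dataclasses import dataclass


# Expression nodes
@dataclass
class Num:
    value: int


@dataclass
class Var:
    name: str


@dataclass
class BinOp:
    op: str
    left: object
    right: object


# Statement nodes
@dataclass
class Let:
    name: str
    expr: object


@dataclass
class Print:
    expr: object


OPERATORS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def evaluate(node, env):
    """Evaluate an expression node by recursively walking the tree."""
    if isinstance(node, Num):
        return node.value
    if isinstance(node, Var):
        return env[node.name]
    if isinstance(node, BinOp):
        left = evaluate(node.left, env)
        right = evaluate(node.right, env)
        return OPERATORS[node.op](left, right)
    raise TypeError(f"not an expression: {node!r}")


def execute(program, env=None):
    """Run a list of statement nodes; return everything PRINT produced."""
    env = {} if env is None else env
    output = []
    for statement in program:
        if isinstance(statement, Let):
            env[statement.name] = evaluate(statement.expr, env)
        elif isinstance(statement, Print):
            output.append(evaluate(statement.expr, env))
        else:
            raise TypeError(f"not a statement: {statement!r}")
    return output


# Hand-built tree for:  10 LET A = 3  /  20 PRINT A * 2 + 1
program = [
    Let("A", Num(3)),
    Print(BinOp("+", BinOp("*", Var("A"), Num(2)), Num(1))),
]
```

Calling `execute(program)` walks the two statements in order. Each node type gets one branch in the interpreter, which is about all there is to the approach.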

The GUI is built using the basic Java features, AWT and Swing. I didn't want to include too many dependencies, to be honest. The parser generator aside.

The screen shot below is produced by an actual BASIC program running in the interpreter, producing output on the console. So cool.

Tags: the-nerdy-bit, hacking

A Free Cloud Offer for Personal Projects

For some personal experimentation projects, I’d like to use a virtual server somewhere out there. Since I have my personal domain (including this blog) hosted at Strato, I decided to use their offering, and got disappointed quickly. It costs money (not too little either), but the performance, reliability, and connectivity are rather bad. (10 seconds to establish an ssh connection? Come on.) Customer service offers the most basic helpfulness. So I closed that chapter.

Cloud providers looked like an interesting alternative. Google and AWS have good, industry-standard, offerings, and I had dabbled with them in the past in personal projects. Also, my previous and current companies use them.

But I was curious about the cloud offering of my former employer, Oracle. They offer an “always free” tier that has some limited (of course) but interesting characteristics. After all, there are people running Minecraft servers in that tier, so it can’t be all weak and lame. In fact, the Ampere (ARM) server with up to 4 CPUs and 24 GB memory looks rather interesting.

Sign-up was weird but, given I know the place a bit from the inside, not too surprising. The sign-up confirmation page told me I’d receive an e-mail with the details within 15 minutes or so. Having not received that e-mail after several hours, I reached out to customer support, only to be told that approval for the provisioning had to be processed by several teams, which could take a while due to full queues. Yup, that’s typical bureaucratic Oracle, nothing unusual to see here.

Two days later, the account was provisioned in the German data center. I logged in, only to find a well structured and rather clear console. I worked my way through a tutorial for setting up a Docker environment, adapting it to the newer version of Oracle Linux I found in my VM, and it worked flawlessly. Performance was pleasant, ssh access instant. As mentioned, the console is fairly clear and usable.

What next? Should I set up that Minecraft server?

(Note that this isn't a paid ad at all.)

Tags: hacking

The Secret Life of a Mathematica Expression

The Mathematica software has been around for quite a while now. It pioneered notebooks (eat that, Jupyter) and was already pretty advanced when it first came out.

Most of the time, Mathematica is seen as that super powerful and rich environment for doing all things math. Which it is. What’s less appreciated is that it comes with a complete programming language that enables all these things. The language is based on just one fundamental principle, pattern matching. It may be a little awkward to appreciate that 3+4 isn’t just simply an addition, but involves some pattern matching before doing the computation.

A former colleague at Oracle Labs, David Leibs, has put together a nice (and increasingly nerdy) presentation on The Secret Life of a Mathematica Expression that I highly recommend. Be warned, it goes pretty deep on the semantics and how they can be used to build that big math brain extension called Mathematica. But it’s also highly instructive as a deep look into how a different and definitely not mainstream programming paradigm works, and how powerful it is.

Tags: the-nerdy-bit, hacking

Google Meet Hardware Support

You know the feeling: you’re in a Google Meet call, have some relevant things open in several browser tabs on the other monitor, you want to push some button in the Google Meet window (or leave it), but you first have to find that mouse pointer, move it over to the right window, navigate it to the right button, and finally, with an exasperated sigh, click it. Maybe there are keyboard shortcuts, but for those to work, you still have to bring the focus to the meeting window first. If only there was a thingamajig that could do the trick.

Turns out there is. Enter Stream Deck. (Unpaid ad.) Add to that a dedicated Chrome plugin for Google Meet, et voilà:

So this thing, which connects via USB, has 15 buttons with little LC displays in them that can be programmed to trigger just about any activity by using its standard app. It’s apparently popular amongst streamers, but why should they have all the fun?

The aforementioned Meet plugin is a dedicated thing that directly talks to Chrome’s implementation of WebHID. That’s beautiful because no device driver is needed. The plugin is very well done: it reconfigures the buttons depending on the context Google Meet is in. On the Meet home page, it will show buttons to enter the next scheduled meeting, and to start a new unscheduled one. In the meeting, it shows the buttons you can see in the photo above. They also change when pushed: for example, the image for the mute button (bottom left) will turn red and show a strike-through microphone image while muted; and the button for raising your hand will get a blue background while the hand is up.

Since I took the picture, the features have changed: the Stream Deck now displays more buttons for interacting with the meetings, e.g., switching on/off the emoji reaction bar, and so forth.

Occasionally, Google decides on a whim to change the DOM ID of some of the buttons on the Meet page. That means the respective buttons on the Stream Deck stop working. It's an easy fix, though: simply inspect the page elements with the Chrome built-in tooling, change the plugin JavaScript source in the right place, and reload the plugin.

All in all, this is a productivity booster.

Tags: work, hacking, the-nerdy-bit

Sharing Pages in Logseq

Logseq is a real power tool for note taking and knowledge management. I had mentioned I’m using it a while ago already.

I keep my personal logs strictly separate from work related logs, for obvious reasons. However, there is one important part of the knowledge graph that is good to have both in the work and personal hemispheres: my collection of notes about articles and books I read. I’m going to describe now how I’m ensuring I keep this particular info synchronised across the work and personal knowledge graphs.

Thankfully, Logseq has a very simple storage model: Markdown files in directories. That makes it easy to use an independent synchronisation tool like git to synchronise and share parts of the storage. Logseq stores all individual Markdown files in the pages directory. It also has a notion of “name spaces”, where pages can be grouped by prefixes separated by the / character. For example, an article I file under Articles/Redis Explained will have the file name Articles%2FRedis Explained.md - it really is as simple as that.

I’ve made the root directory of my Logseq storage a git repository. I have a .gitignore file that looks like this:

    .logseq
    .gitignore.swp
    .DS_Store
    journals/**
    assets/**
    logseq/**
    pages/**
    !.gitignore
    !pages/Articles*
    !pages/Books*

As you can see, it excludes pretty much everything but then explicitly includes the things I want to share across machines: the .gitignore file itself, and any page that has an Articles or Books prefix. Occasionally, I will have to force-add a file in the assets directory, which is where images are stored. That’s manageable. The rest is git add, git commit, git push, and git pull, as usual.

With this setup, I can freely share notes about articles and books across my two Logseq graphs, and reference the articles and books from my personal and work notes easily.

Tags: the-nerdy-bit, hacking, work

Mobile IDE

iPads are nice, and the iPad Pro is a rather powerful laptop substitute - almost. While it’s perfectly possible to do office style work on an iPad, anything software engineering becomes hard, because IDEs involving compiling and running code on the device, as well as convenient things like command line interfaces, aren’t supported on the platform. The manufacturer wants it so.

There are web IDEs. Unfortunately, they’re a bit frustrating to use when travelling (e.g., on a train in Germany, or on an airplane).

Raspberry Pi to the rescue. Of course. These machines are small, lightweight, don’t consume too much power, and are yet fairly capable as Linux computers. Real IDEs run on them. Compiling and running code on them isn’t a problem either.

The Tech Craft channel on YouTube has this neat overview of a mobile setup that combines the best of both worlds, using the Linux goodness of a Pi and the user interface of an iPad. It’s tempting.

Tags: work, the-nerdy-bit, hacking

Logseq

In May last year, I started using Logseq for my note taking - both personal stuff and at work. I got interested because some people whose judgment in such matters I trust were quite enthusiastic about Logseq. Giving it a look couldn’t hurt, I thought.

Today, I’m still using it, and have already transferred some of my larger personal note collections to it. I keep discovering new features and possibilities, and let’s just say I’m hooked.

What do I like about it? A random collection:

At a high level, Logseq is a lightweight note taking and organisation tool with lots of pragmatic and sensible features.

Logseq keeps all the data on the local drive, in Markdown format, unencrypted, accessible.

The editor has built-in features for very swift linking from text in a page to external resources, other pages, sections in pages, and even down to single paragraphs (“bullets”).

Recurring structures can be easily reproduced using templates.

Pages can have alias names, making for nicer linking.

Logseq adds, atop the plain Markdown files, an index that allows for extremely swift searching. There’s a powerful advanced query and filtering capability, too.

It’s available on desktop, iOS, and Android.

There are numerous ways of synchronising across devices, including iCloud and git.

The tool is open source, and the monetisation model (yes, it has one, to sustain services and community) is extremely forthcoming: you pay as much as you want in a mode of your choice if you think it’s deserved. (It is.)

Logseq also has a graph visualisation of the page structure - I have yet to discover its true worth but it sure looks nice. A cross-device sync feature has been added and is available in beta mode for paying customers - I'm one of them, and it's pretty usable and stable already.

I have barely scratched the surface. There’s a plugin API allowing for all kinds of power-ups, automation is possible to a considerable extent, and so on, and so on. I believe Logseq is a true power tool.

Tags: the-nerdy-bit, hacking

Z80 Memories

Chris Fenton has built a machine. It’s a multi-core Z80 monster in a beautiful laser-cut wooden case. I have nothing but deep admiration for this kind of project and the drive that lets people pursue and complete it. It also brings back a lot of memories.

The Z80 was the first CPU I learned how to program assembly for, back in the Nineties, on an Amstrad PCW. The operating system on that one was CP/M Plus, but you could also boot (boot!) a text processor named LocoScript. The programming language of choice ended up being Turbo Pascal 3.0.

What bothered me was that I couldn’t let stuff run “in parallel”, so I hacked my way into something resembling that. Pretty much all low-level functions would make calls to the operating system entry point, at memory address 0005. (The machine code for that call was CD 05 00. Yes, I still remember that.) So I inserted a jump table at that address that would call all the Pascal procedures I wanted to run in parallel before proceeding with the low-level operating system call.

I quickly figured out (the hard way) that those Pascal procedures better contain no calls to low-level operating system routines, because ... infinite loop. Oopsie.
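The idea translates to any language in which a function can be swapped out for a wrapper. Here is a minimal sketch in Python, far away from Z80 machine code: `make_hooked_entry`, `fake_os_entry`, and `blink_cursor` are made-up names, and the function number passed in is arbitrary.

```python
def make_hooked_entry(original_entry, background_tasks):
    """Return a replacement entry point that runs tasks before delegating."""
    def hooked_entry(function_number, argument=None):
        for task in background_tasks:
            # A task must not call the hooked entry point itself,
            # otherwise it would re-enter this loop without end.
            task()
        return original_entry(function_number, argument)
    return hooked_entry


# Hypothetical stand-ins for the operating system and a "parallel" procedure.
log = []


def fake_os_entry(function_number, argument=None):
    log.append(("os", function_number, argument))
    return 0


def blink_cursor():
    log.append(("task", "blink_cursor"))


# Every "operating system call" now runs the background tasks first.
os_entry = make_hooked_entry(fake_os_entry, [blink_cursor])
os_entry(2, "A")
```

In Python, a task that calls `os_entry` would end in a `RecursionError` rather than a frozen machine, but the mistake is the same.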

Tags: the-nerdy-bit, hacking

Mini Lambda

Justine Tunney has built a plain lambda calculus interpreter that compiles down to just 383 (as of this writing) bytes of binary (on x86_64). That's Turing completeness in just under 400 bytes of machine code, and by default "instant awesome". The documentation is extensive, contains lots of examples, and such gems as a compiler from a symbolic representation of lambda calculus to the interpreter's binary input format using sed. If you have any interest in minimal abstraction, do yourself a favour and check this out please.

Tags: hacking, the-nerdy-bit

Mature Programming Language Ecosystems

I used to work a lot with, and on, Java, so I have a soft spot for that language and ecosystem. One specific point I've come to realise while dabbling with some tech and reading about log4j problems over the past months is that a rich standard library (like the one that's part of Java) can save you a lot of grief. The following can easily be misunderstood as flamebait. Please don't.

The log4j misery could have been avoided - the Java standard library has had a built-in logging facility since JDK 1.4; and a capability for remote code execution simply isn't needed in a logging library.

Dependencies can be tricky. On Windows, there used to be DLL hell; today, we have npm dependencies that have a tendency to go really awry. Yes, Java has its issues too, when there are hard-to-resolve conflicts between dependencies managed by Maven, for instance. But back to npm. The JavaScript language is very small, and it and Node.js don't come with a very rich standard library. Consequently, many "standard" things end up being pulled in as dependencies through npm. Also, everybody (and then some) thinks it a good idea to release their particular solution to a recurring problem as an npm module.

The maze of dependencies, sometimes conflicting licences, and outdated or insecure code becomes ever harder to navigate, leading to yet more software being built to help developers and companies (who don't want to lose lots of money in licensing or software security lawsuits) to handle the complexity. That means there are businesses flourishing on the fallibilities of the ecosystem, rather than fixing those.

Sometimes modules are pulled "just like that" (because the developers can), and sometimes this happens for the worst reasons, e.g., because a developer cannot make a living from software they hand out for free after their apartment burned down. This points to a deeper problem with open-source software: it's taken for granted. And if a maintainer doesn't have a company behind them that helps with paying the bills, it's a precarious gratitude those developers are being shown.

Libraries and dependencies growing out of proportion is an issue that can be addressed by relying on an ecosystem that comes with a rich standard library to begin with. Java is at the heart of one such ecosystem, and it's being maintained and developed in a very sane and transparent process, by a very capable and mature community. Some big industry players are part of that community, and fund a lot of the work. I'm using Java as one example - there are others.

What's my point? There are several:

When choosing technology that's meant to run a business, erring on the side of tried-and-true ecosystems with rich standard libraries and robust buy-in is safer.

Where vibrant open-source technology is used, consider funding it in addition to using it, to have a visible stake.

Technology should be chosen for the right reasons. Therefore, it doesn't need to be hip. It needs to work, reliably and sustainably.

Working with tried-and-true (some might say "old and boring") technology is no substitute for supporting research into new, innovative things that can be the tried-and-true ones ten years from now.

Reply-All

(Warning: maybe because it's 1 April, the post below contains a bit of irony. When you find it, feel free to keep it.)

You've seen them - congratulatory e-mails flooding your inbox, even if you're not on the receiving end of the celebration but merely a member of the cheering crowd. Thanks to "reply-all", they happen. I personally don't mind them much, but some people do take mild offence at being faced with the challenge of having to mark swathes of "congrats!" messages as read.

There's something to be said for both sides here. On the one hand, such a broadcast message, e.g., a promotion announcement, can be seen as primarily meant to notify the crowd of the news. Reply with heartfelt congrats, rejoice in the fact, cool, move on. On the other, the broadcast does have a social aspect to it in that it encourages the crowd to cheer, and the cheering gets amplified by itself.

Of course, there's an easy remedy. Instead of putting all recipients on CC, putting them on BCC and keeping just the intended recipient of the congratulations in the "To:" field will reduce the recipients of the "reply-all" flood to just the subject of the celebration and their manager (or whoever sends the message). The parties put on BCC can be mentioned in the message, for transparency.

I sense an actual research question in this: Will CC-reply-all flooding incentivise more people to congratulate the person, or will the ones who want to do this do it anyway? Is there an amplification effect in "reply-all"? If so, what does it amplify more, cheering, or grumbling? Does BCC-messaging have a contrary effect?

Who's in for doing an empirical study?

Tags: work, hacking, the-nerdy-bit

Contemporary CPU Architecture

Building a CPU (or other hardware device) emulator is a fun endeavour - I've built myself half a Z80 emulator in Smalltalk once, test-driven development and all.

However, writing comparatively low-level code in the 21st century is really old-school. You have to use contemporary technology for everything now. That includes building emulators.

Consequently, David Tyler has built an 8080 emulator complete with computer and CP/M operating system to run on the CPU. It being "today", he has of course applied what is en vogue. That means microservices (one for each opcode supported by the CPU, just in case you were wondering), Docker, and the like. It's super hilarious. In fact, it's a more than valid successor to this wonderful enterprise Java implementation of FizzBuzz.

Tags: hacking, the-nerdy-bit

Hammerspoon

By chance, I came across Hammerspoon a while ago. This is a pretty amazing tool for Mac automation. It hooks right into a (still growing) number of the macOS APIs and allows for fine-grained control. It also offers numerous event listeners that allow for reacting to things happening, e.g., signing on to a certain WiFi network, connecting a specific external monitor, battery charge dropping below a certain threshold, and so forth. It's also possible to define hotkeys for just about everything. Use Cmd-Option-Ctrl-B to open a URL copied to the clipboard in the tracking-quenching Brave Browser? You got it:

    hs.hotkey.bind({"cmd", "alt", "ctrl"}, "B", function()
      local url = hs.pasteboard.readString()
      hs.applescript('tell Application "Brave Browser" to open location "' .. url .. '"')
    end)

The goodness is brought about by the Lua scripting language. Lua is very lightweight and yet powerful, and easy to learn. Oh, and Hammerspoon has some very good API documentation, too.

Tags: hacking

Writing Documentation

This article argues that there are mainly two reasons why developers don't (often, usually) (like to) write documentation. Firstly, writing is hard, and secondly, not documenting doesn't block shipping. There are also some remarks on the value of documentation, and advice on how to go about ensuring there is decent documentation after all. I don't disagree with any of that, but that's not the point. I would like to expand a bit on the "writing documentation is hard" topic.

Back when I was working on JEP 274, which eventually made it into Java 9 in the form of a chunk of public API in the OpenJDK standard library, I wrote a lot of API documentation. This was a necessity because other implementers of the Java API, such as IBM, were supposed to be 100 % compatible but were, for legal reasons, not allowed to look at the implementation behind the API. So, that API documentation had better be rather darn accurate.

The centerpiece of JEP 274, a method used to construct loops from method handles, has a complex but nifty abstraction of loops at its core. (I fondly recall the whiteboard session where several of the wonderful Java Platform Group colleagues at Oracle designed the "mother of all loops".) When I had completed the first version of this, I put it out there for review, and started collaborating on the project with an excellent colleague, named Anastasiya, from the TCK group, located in St Petersburg, Russia.

It was Anastasiya's job to ensure the API documentation and implementation were aligned. She took the API documentation - which I was quite fond of already - and wrote unit tests for literally every single comma in the documentation. Sure enough, things started breaking left and right. During our collaboration, I learned a lot about corner cases, off-by-one errors, and unclear language. In a nutshell, my implementation was full of such issues, and the API documentation I thought so apt was not exactly useless, but irritating in many ways.

Anastasiya and I worked very closely for several months, and eventually, the documentation was not only in line with what the implementation did, but also precise enough to be usable by our friends at IBM.

Point being? Writing good documentation is indeed very, very hard, and I couldn't have done this without Anastasiya's help. Without her, I'd have ended up shipping public Java API - code running on dozens if not hundreds of millions of devices worldwide - in a really bad state. I'm still very grateful.

Array Programming

There are multiple programming paradigms. Object-oriented and functional programming are the most popular ones these days, and logic programming has at least some niche popularity (ever used Prolog? it's interesting). Put simply, the paradigm denotes the primary means of abstraction in languages representing it. Classes and objects, (higher-order) functions, as well as facts and deduction are constituents of the three aforementioned paradigms, and no news to users thereof.

Also, there's array programming. Here, arrays are the key abstraction, and all operations apply to arrays in a transparent and elegant way. The paradigm is visible in many of the popular programming languages in the form of libraries, e.g., NumPy for Python.

Array programming also has languages representing it that put "the array" at the core and bake the array functionality right into the language semantics. "Baked in" here means that the loopy behaviour normally encountered when dealing with arrays isn't visible: there are operations that apply the loops transparently, and even support implicit parallelisation of operations where possible. One of the more widely known languages of this kind is R, which is rather popular in statistics.

All of array programming stems from a programming language conceived in the 1950s named "A Programming Language" (not kidding), and abbreviated APL. This language is most wonderful.

APL's syntax is extremely terse - many complex operations are represented by just one single character. As a very simple example, consider two arrays a and b with the contents 1 2 3 and 4 5 6. Adding these pairwise is simple: a + b will yield 5 7 9. Look ma, no loops. As another example, taking the sum of the elements of an array is done by using the + operation and modifying it into a fold using /: +/a will yield 6.
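For readers more at home in a mainstream language, here is roughly what those two APL expressions amount to, sketched in plain Python without any array library. The helper names `pairwise_add` and `plus_reduce` are made up for illustration; the point is that the iteration APL hides has to be spelled out here.

```python
from functools import reduce
from operator import add

a = [1, 2, 3]
b = [4, 5, 6]


def pairwise_add(xs, ys):
    """What APL writes as  a + b : add two arrays element by element."""
    return [x + y for x, y in zip(xs, ys)]


def plus_reduce(xs):
    """What APL writes as  +/a : fold the + operation over the array."""
    return reduce(add, xs)


print(pairwise_add(a, b))  # [5, 7, 9]
print(plus_reduce(a))      # 6
```

Two function definitions and an import, versus `a + b` and `+/a`.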

You see where this leads. APL code is very condensed and low on entropy. Many complex computations involving arrays and matrices can literally be expressed as one-liners. John Scholes produced a wonderful video developing a one-line implementation of Conway's Game of Life.

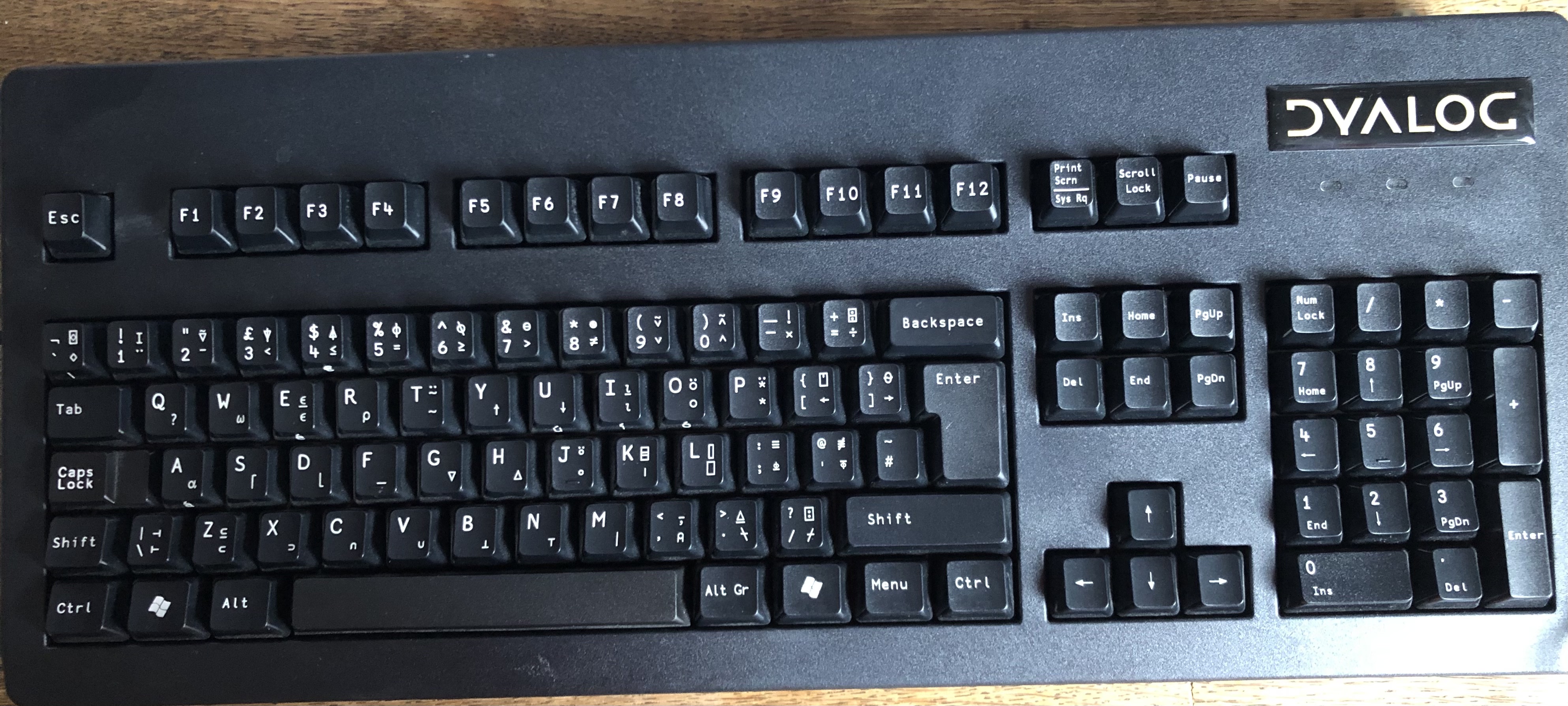

Indeed, there are numerous special characters in the APL syntax, and IBM used to produce dedicated keyboards and Selectric typeballs for the character set. To this day, it's still possible to buy APL keyboards. Here's mine. Look at all the squiggly characters!

After making my way through an APL book, I wanted to write a little something on my own, and ended up doing a BASE64 encoder/decoder pair of functions. They're both one-liners, of course. Admittedly, they're long lines, but still. I've even documented how they work. Looky here.

While you may wonder about the practicability of such a weird language, be assured that there's a small but strong job market. Insurance companies and banks are among the customers of companies like Dyalog, which build and maintain APL distributions. Dyalog APL is available for free for non-commercial use.

Tags: hacking

One-Letter Programming Languages

This page about one-letter programming languages is absolute gold. I hadn't realised how many of them there are out there.

I've been using some of them. C, of course. J, K, and Q are all APL descendants that I was using to improve my understanding of array programming when working on an implementation of R, which I've obviously also used.

Some of these languages are cranky, but "one letter" doesn't imply crankiness per se.

Tags: hacking

Code Pwnership

code pwnership: being in charge of a code base multiple clients depend on, having exclusive commit and release rights, and totally refusing to fulfil requests or consider pull requests.

Tags: the-nerdy-bit, work, hacking

Forth

Programmed in Forth ever you have?

If you chuckled at that, the answer is probably "yes".

Forth is the programming language that makes you code the way Yoda talks. Point being, you push values on a stack and eventually execute an operation, the result of which will replace the consumed elements on the stack. The arithmetic operation 3+4*5 could be expressed by saying 4 5 * 3 + (to really really honour evaluating multiplication before addition). It could equally well (and a bit more idiomatically) be expressed as 3 4 5 * +: 4 and 5 will be the topmost elements of the stack after 3 4 5, and * takes the two topmost elements. Either way, the result will be 23.
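The stack discipline is small enough to sketch in a few lines of Python. This illustrates the evaluation scheme only, not Forth itself: `eval_rpn` is a made-up name, and it knows just integers and three operators.

```python
import operator

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def eval_rpn(source):
    """Evaluate whitespace-separated tokens on a stack; return the stack."""
    stack = []
    for token in source.split():
        if token in OPS:
            # An operation consumes the two topmost elements ...
            right = stack.pop()
            left = stack.pop()
            # ... and its result replaces them on the stack.
            stack.append(OPS[token](left, right))
        else:
            stack.append(int(token))
    return stack


print(eval_rpn("4 5 * 3 +"))  # [23]
print(eval_rpn("3 4 5 * +"))  # [23]
```

Note that there is no parsing to speak of: tokens are processed strictly left to right, which is a big part of why such implementations stay so compact.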

This sounds appropriately nerdy, and you might wonder what the heck this is all about. The thing is, this simple convention makes for very efficient and compact implementations of the language, and Forth is still quite popular in embedded systems such as the Philae comet lander. If your computer runs on Open Firmware, you have a little Forth interpreter right at the bottom of your tech stack.

The Factor programming language is a direct descendant of Forth, and comes with a really powerful integrated development environment, as well as with a just-in-time compiler written in Factor itself. The IDE has executable documentation, making it similar in explorable power to Smalltalk and Mathematica.

Tags: hacking

Mostly Functions

"Write code. Not too much. Mostly functions."

I agree. Note that this can be easily misunderstood as one of those stupid pieces that pitch one particular programming paradigm against all the others. Don't take it that way.

Object-oriented abstractions have as much a justification as have functional ones (and don't get me started about all the other paradigms). Each has its place, and functions are a very good abstraction for, well, functionality, while objects are a good one for state.

Modifying state is a necessity, and those bits should be tucked away safely in very disciplined places to avoid the headaches that arise when parallelism comes into play. All the rest should be expressed in functions that are as pure as possible - same input, same output - to allow for clearer reasoning about what is going on. Programming languages usually allow working that way.
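As a minimal sketch of that split, assuming a made-up till example in Python: the calculation lives in a pure function, and the only mutable state sits in one small class that guards it with a lock. `apply_discount` and `Till` are hypothetical names.

```python
import threading


def apply_discount(price_cents, percent):
    """Pure function: same input, same output, no side effects."""
    return price_cents * (100 - percent) // 100


class Till:
    """The one disciplined place where state is modified."""

    def __init__(self):
        self._lock = threading.Lock()
        self._total_cents = 0

    def add_sale(self, price_cents, percent=0):
        # Do the pure computation outside the lock ...
        amount = apply_discount(price_cents, percent)
        # ... and keep the state change as short as possible inside it.
        with self._lock:
            self._total_cents += amount

    @property
    def total_cents(self):
        with self._lock:
            return self._total_cents
```

The pure part can be reasoned about and tested in isolation; only `Till` needs any thought about parallelism.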

Tags: hacking

Self-Documenting Code

Self-documenting code is a dream that rarely comes true. Most of the time, self-documentation goodness applies well at the level of individual functions or methods, but it gets more tricky when entire classes or modules and their interactions come into play. Frankly, it is vastly more relevant to document their intent than that of functions or methods (which can be read in their entirety quickly). Here is another case for putting meaningful comments in code, from a somewhat unexpected angle: they work better than PR comments to document code's purposes.

Tags: hacking

Laptop Purchase

A while ago, I backed the Crowd Supply campaign for Reform, an open-source laptop. There's a small Berlin-based company behind this, the founder of which, Lukas F. Hartmann, is a genius. He likes to build things from scratch in an open-source fashion. One example is an operating system. Another is the Reform laptop. Lukas and friends have designed the entire machine from the ground up: PCB layout, keyboard, case, and all that. The open-source philosophy goes as far as making 3D printing instructions for the case parts available. The laptop is a bit clunky, but completely maintainable (no hardwired battery!). It's also a bit pricey and comes with little memory, but the idea as such has my greatest sympathy.

Future editions of the laptop might go even further in the open-source direction. The current processor is still based on the (proprietary) ARM architecture, but Lukas is already thinking about a RISC-V based machine.

In backing the campaign, I chose the package that involves me assembling the laptop from its parts. This is going to be so much fun. I've also agreed with Lukas to save him the shipping cost, and will pick up the box in person in Berlin, hopefully in December.

Tags: hacking, the-nerdy-bit

Bashblog Support for "Finished" Markdown Files

So Mitch Wyle told me a while back that he likes to use the e-mail inbox feature of the Blogger platform: write your posting, send it to that e-mail address, and have it posted. That works well on an airplane - all postings will go live once the mobile phone has connectivity again.

Since I use bashblog, which isn't really a hosting service, adding e-mail will be a bit of a stretch, but there could be a different solution.

I host my blog in a private GitHub repository anyway. So how about a workflow that involves, towards the end, pulling in a Markdown file I store in some branch, putting it through bashblog, and posting it?

Now, bashblog is a bit averse to simply processing files without its edit loop. But that's fine. I've added a publish command to bashblog that allows for just that. It may be buggy (I'm not too well versed in shell programming), and I'll be grateful for suggestions for improvement.

While the processing (pulling from GitHub, applying bashblog, uploading to the webspace) can be done by another Raspberry Pi at home that runs a cron job, I'm also painfully aware that the first steps of the workflow are still unclear.

How do I get that Markdown file onto GitHub, say, when I'm sitting on an airplane? Can I send GitHub an e-mail with the contents (push-by-mail)? Can I use the GitHub app in offline mode? More things to try.

Tags: hacking

bashblog and MacVIM

My favourite text editor is MacVIM, so it's the logical choice to use this one for editing the Markdown sources for these bashblog blog postings. MacVIM also comes with a command line tool, mvim, that can be used to start editing in the GUI from the shell.

There's just one caveat when using mvim as the EDITOR for bashblog: mvim is a shell script that starts the MacVIM application using exec, so it terminates as soon as it hands over control to the editor. For bashblog, termination of the EDITOR command is the signal to save the source file and generate HTML.

Since the source file is initially generated containing a template, the filename will be title-on-this-line.html, and the file will contain just that: the template. To actually generate the desired blog posting, one has to re-enter the editor and save again.

There's a simple hack that doesn't involve modifying either bb.sh or mvim: simply set EDITOR="mvim -f" in bashblog's .config file. The -f argument will keep the mvim script from terminating.
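To see why a launcher that returns early trips up a caller that waits for its editor, here is a small Python sketch. It does not reproduce bb.sh or mvim; DETACHING and FOREGROUND are hypothetical stand-ins for "a launcher that hands off and exits" and "a command that stays in the foreground", and the one-second sleep plays the role of the editing session.

```python
import subprocess
import sys
import time

# Hypothetical stand-in for an editing session that lasts one second.
SESSION = "import time; time.sleep(1)"

# Stand-in for a launcher that starts the session in the background and
# exits right away (the behaviour described above for plain mvim).
DETACHING = [
    sys.executable, "-c",
    "import subprocess, sys; "
    "subprocess.Popen([sys.executable, '-c', sys.argv[1]], "
    "stdin=subprocess.DEVNULL, stdout=subprocess.DEVNULL, "
    "stderr=subprocess.DEVNULL)",
    SESSION,
]

# Stand-in for a command that stays in the foreground (like mvim -f).
FOREGROUND = [sys.executable, "-c", SESSION]


def seconds_until_return(command):
    """Run the command the way a caller waiting on its EDITOR would,
    and report how long it takes until control comes back."""
    start = time.monotonic()
    subprocess.run(command, check=True)
    return time.monotonic() - start


if __name__ == "__main__":
    print("detaching launcher returned after", seconds_until_return(DETACHING))
    print("foreground command returned after", seconds_until_return(FOREGROUND))
```

The detaching launcher comes back almost immediately, long before the "session" is over, which is the moment a waiting caller would go ahead and process the still-untouched template. The foreground variant only returns once the session has ended.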

Tags: hacking